At CES 2025, NVIDIA CEO Jensen Huang, known for his signature leather jackets, took the stage at the Michelob Ultra Arena to share the company's bold new vision: blending artificial intelligence with the physical world. This wasn't just another product launch; it was a defining moment in tech history, marking the shift from generative AI to what Huang calls "physical AI."

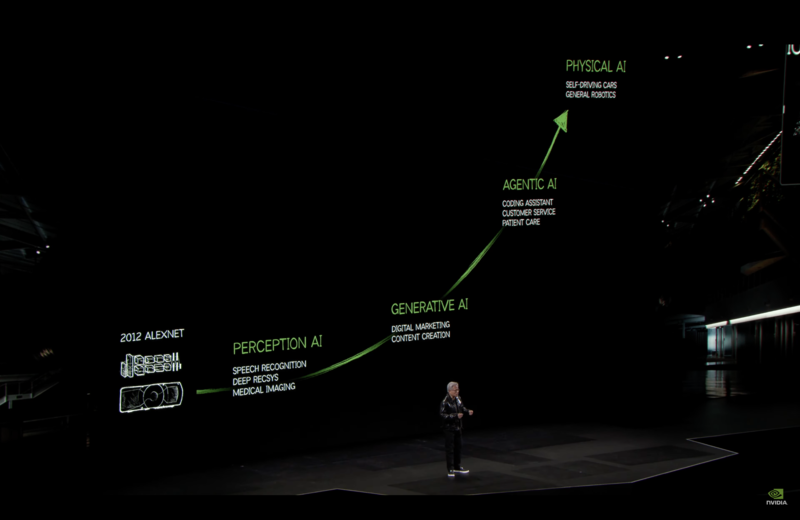

The Three Waves of AI

In front of a crowd of over 6,000, Huang traced the journey of AI. "We started with perception AI — understanding images, words, and sounds. Then came generative AI — creating text, images, and sound," he said, before introducing the next big thing: "physical AI, AI that can perceive, reason, plan, and act."

This is more than theory: each wave has extended AI into new industries, and each builds on the last, opening up new opportunities and challenges:

| AI Wave | Key Capabilities | Real-World Impact |
|---|---|---|
| Perception AI | Understanding sensory input | Computer vision, speech recognition, natural language processing |
| Generative AI | Creating content from prompts | ChatGPT, DALL-E, text-to-speech, code generation |
| Physical AI | Interacting with the real world | Robotics, autonomous vehicles, industrial automation |

The keynote unveiled five major initiatives that showcase NVIDIA's comprehensive approach to this new era:

- NVIDIA Cosmos: A world foundation model platform for physical AI development

- RTX 50 Series: Next-generation graphics cards powered by the Blackwell architecture

- Project DIGITS: A pocket-sized AI supercomputer for developers

- DRIVE Thor: Advanced autonomous vehicle computing platform

- Llama Nemotron: Enterprise-grade AI models for business applications

The Foundation of Modern AI

Huang took a moment to reflect on NVIDIA's journey, from the company's founding in 1993 and the first programmable GPU in 1999 to today's AI revolution.

"We wanted to build computers that can do things that normal computers couldn't," Huang recalled, describing the company's initial mission. "And now, 30 years later, we're witnessing a fundamental transformation in how computing works."

This transformation is evident in the numbers. The latest AI models contain hundreds of billions of parameters and require computational power that would have been unimaginable just a decade ago. NVIDIA's response to this demand spans three critical areas:

Three Computing Paradigms for the AI Era

- Training Infrastructure: Massive GPU clusters in data centers for developing AI models

- Inference Systems: Optimized platforms for deploying AI in real-world applications

- Development Tools: Software frameworks and platforms that enable AI creation and deployment

The Three Laws of AI Scaling

During the keynote, Huang introduced what he calls the "three laws of AI scaling" that are driving the industry forward:

1. Pre-training Scaling Law

The traditional scaling law shows that model capability increases with:

- Larger datasets

- Bigger models

- More compute power
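The relationship between these three factors is often modeled as a power law. The sketch below is illustrative only: the "irreducible loss plus power-law terms" shape is a common way to write such laws, the `6 * params * tokens` compute estimate is a widely used rule of thumb, and every constant is a placeholder chosen for demonstration rather than a figure from the keynote.

```python
def pretraining_loss(params: float, tokens: float,
                     irreducible: float = 1.7,
                     a: float = 400.0, alpha: float = 0.34,
                     b: float = 400.0, beta: float = 0.28) -> float:
    """Toy power-law loss (lower is better). All constants are placeholders."""
    return irreducible + a / params ** alpha + b / tokens ** beta


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: roughly 6 FLOPs per parameter per token."""
    return 6 * params * tokens


if __name__ == "__main__":
    # Scale model size and dataset size together by 10x at each step.
    for params, tokens in [(1e9, 2e10), (1e10, 2e11), (1e11, 2e12)]:
        loss = pretraining_loss(params, tokens)
        flops = training_flops(params, tokens)
        print(f"{params:.0e} params, {tokens:.0e} tokens, "
              f"{flops:.1e} FLOPs -> loss {loss:.3f}")
```

The loss falls with every tenfold step but never drops below the irreducible term, which is why each gain costs far more compute than the one before it.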

2. Post-training Scaling Law

This newer paradigm focuses on:

- Reinforcement learning from human feedback

- Fine-tuning for specific domains

- Synthetic data generation

3. Test-time Scaling Law

The latest frontier in AI optimization:

- Dynamic resource allocation during inference

- Multi-step reasoning processes

- Internal reflection and self-improvement
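One simple way to picture test-time scaling is best-of-N sampling: spend more inference compute by drawing several candidate answers and keeping the highest-scoring one. The sketch below is a toy under stated assumptions. `toy_answer_quality` is a hypothetical stand-in for "generate an answer and score it", and nothing here is taken from the keynote or from any NVIDIA product.

```python
import random


def toy_answer_quality(rng: random.Random) -> float:
    """Hypothetical stand-in for sampling one answer and scoring it in [0, 1)."""
    return rng.random()


def best_of_n(n: int, seed: int = 0) -> float:
    """Sample n candidate answers and return the best score found."""
    rng = random.Random(seed)  # fixed seed so larger n extends the same sample stream
    return max(toy_answer_quality(rng) for _ in range(n))


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        print(f"n={n:>2}: best score {best_of_n(n):.3f}")
```

Because every run reuses the same seeded stream, a larger `n` can only match or improve the best score. That is the basic trade the list above describes: more compute at inference time in exchange for a better answer.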

"These scaling laws are driving enormous demand for NVIDIA computing," Huang explained. "Intelligence is the most valuable asset we have, and it can be applied to solve a lot of very challenging problems."

A New Chapter in Computing

The significance of this keynote extends far beyond individual product announcements. It represents NVIDIA's vision of a future where AI transcends the digital realm to become an active participant in the physical world. This transition brings both unprecedented opportunities and responsibilities:

| Domain | Current State | Future Vision |
|---|---|---|
| Computing | Primarily digital processing | Physical world interaction |
| AI Development | Specialized expertise required | Democratized access |
| Applications | Content generation | Physical world automation |
| Infrastructure | Centralized data centers | Distributed AI computing |

As Huang emphasized throughout the presentation, this shift requires a fundamental rethinking of how we approach computing. "AI was not just a new application with a new business opportunity," he noted. "AI, more importantly machine learning enabled by transformers, was going to fundamentally change how computing works."

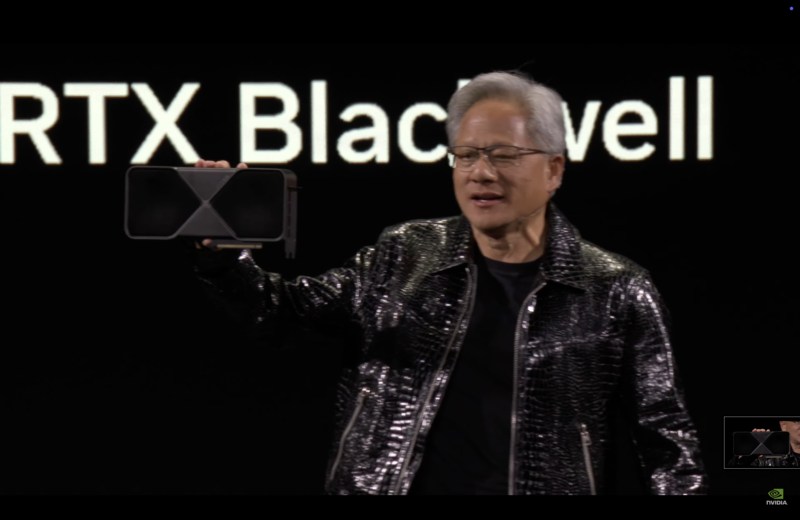

The Next Generation of Consumer Graphics: RTX 50 Series

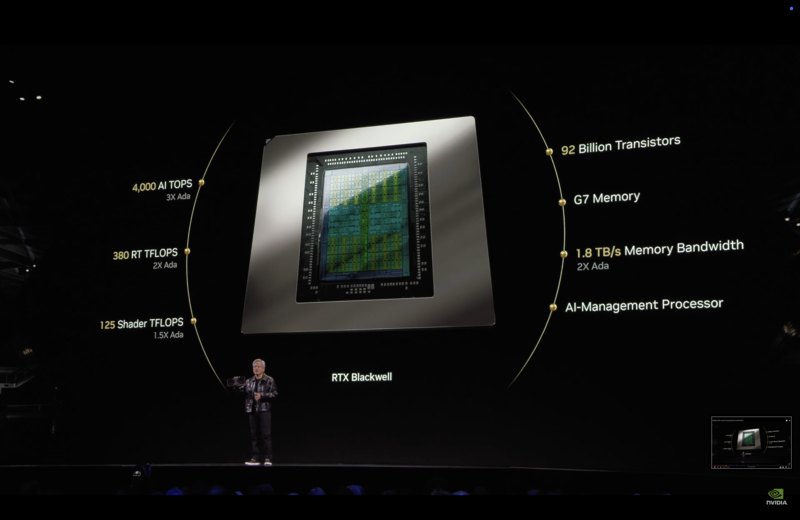

The announcement of NVIDIA's RTX 50 Series GPUs marks a significant evolution in consumer graphics technology and, more importantly, the convergence of traditional graphics processing with AI acceleration. Built on the new Blackwell architecture, these GPUs are more than an incremental improvement: they change how graphics processing works.

Technical Specifications and Breakthroughs

The flagship RTX 5090 showcases impressive specifications that set new standards for consumer graphics:

| Feature | RTX 5090 Specification | RTX 4090 Specification |
|---|---|---|
| NVIDIA Architecture | Blackwell | Ada Lovelace |
| DLSS | DLSS 4 | DLSS 3 |
| AI TOPS | 3352 | 1321 |
| Tensor Cores | 5th Gen | 4th Gen |
| Ray Tracing Cores | 4th Gen | 3rd Gen |
| NVIDIA Encoder (NVENC) | 3x 9th Gen | 2x 8th Gen |
| NVIDIA Decoder (NVDEC) | 2x 6th Gen | 1x 5th Gen |
| Memory Configuration | 32 GB GDDR7 | 24 GB GDDR6X |
| Memory Bandwidth | 1792 GB/s | 1008 GB/s |
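To put the numeric rows of the table in perspective, the short calculation below derives the generation-over-generation ratios. It uses only the figures quoted above; the dictionary keys are names chosen for this example.

```python
# Figures copied from the specification table above.
rtx_5090 = {"ai_tops": 3352, "memory_gb": 32, "bandwidth_gb_s": 1792}
rtx_4090 = {"ai_tops": 1321, "memory_gb": 24, "bandwidth_gb_s": 1008}


def generational_ratios(new: dict, old: dict) -> dict:
    """Return new/old for every spec the two cards share."""
    return {name: new[name] / old[name] for name in new}


ratios = generational_ratios(rtx_5090, rtx_4090)
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x")
# ai_tops: 2.54x
# memory_gb: 1.33x
# bandwidth_gb_s: 1.78x
```

The AI throughput figure grows much faster than memory capacity or bandwidth, which fits the keynote's emphasis on AI-driven rendering over raw memory scaling.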

The AI-Powered Graphics Revolution

What makes the RTX 50 Series truly revolutionary is its approach to rendering. As Huang demonstrated, the GPUs use AI in unprecedented ways:

Neural Rendering Pipeline

- DLSS 4 can generate three additional frames for every rendered frame

- AI handles spatial and temporal upscaling simultaneously

- New architecture allows for concurrent shader operations for floating point and integer calculations
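The frame-generation claim above implies some simple arithmetic, sketched here. The function name and the 36 FPS input are assumptions for illustration, and the calculation ignores any overhead from running the frame-generation model itself.

```python
def frame_generation_stats(rendered_fps: float, generated_per_rendered: int = 3):
    """Return (displayed FPS, share of displayed frames that are AI-generated)."""
    frames_per_rendered = 1 + generated_per_rendered
    displayed_fps = rendered_fps * frames_per_rendered
    generated_share = generated_per_rendered / frames_per_rendered
    return displayed_fps, generated_share


displayed_fps, generated_share = frame_generation_stats(rendered_fps=36)
print(f"{displayed_fps:.0f} FPS displayed, {generated_share:.0%} of frames generated")
# 144 FPS displayed, 75% of frames generated
```

With three generated frames per rendered frame, three out of every four displayed frames come from the AI model rather than the traditional rendering pipeline.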

Key AI-Enhanced Features

| Feature | Description | Impact |
|---|---|---|
| RTX Neural Shaders | AI-optimized texture and material processing | More realistic surfaces with less performance impact |
| RTX Neural Faces | Advanced facial animation and rendering | More lifelike character expressions |
| RTX Mega Geometry | AI-enhanced geometry processing | Up to 100x more geometric detail |
| Neural Texture Compression | AI-optimized texture storage | Better visual quality with smaller memory footprint |

Product Lineup and Availability

NVIDIA announced a comprehensive lineup of RTX 50 Series cards:

| Model | Price | Availability | Relative Performance* |
|---|---|---|---|
| RTX 5090 | $1,999 | January 30 | 2x RTX 4090 |
| RTX 5080 | $999 | January 30 | 1.7x RTX 4080 |
| RTX 5070 Ti | $749 | February | 1.5x RTX 4070 Ti |
| RTX 5070 | $549 | February | 1.4x RTX 4070 |

*Performance metrics based on NVIDIA's internal testing with DLSS 4 enabled

Mobile Graphics Revolution

Perhaps even more impressive than the desktop cards is NVIDIA's laptop GPU lineup. Through clever use of AI-powered rendering and next-generation efficiency optimizations, NVIDIA has managed to fit unprecedented power into thin laptops:

| Laptop GPU | Notable Feature | Target Laptop Thickness |
|---|---|---|
| RTX 5090 Laptop | Desktop 4090-class performance | 14.9 mm |
| RTX 5080 Laptop | Advanced creator capabilities | 16.8 mm |
| RTX 5070 Ti Laptop | Professional workstation graphics | 18 mm |
| RTX 5070 Laptop | Mainstream gaming performance | 19.9 mm |

The Role of AI in Modern Graphics

The RTX 50 Series is more than a new generation of graphics cards; it offers a glimpse into the future of visual computing. As Huang explained during the keynote:

"The future of computer graphics is neural rendering, the fusion of artificial intelligence and computer graphics."

This fusion is evident in how the new GPUs process graphics:

- Traditional rasterization and ray tracing handle core rendering

- AI upscaling and frame generation multiply effective performance

- Neural networks optimize texture and geometry processing

- Machine learning manages resource allocation in real-time

Real-World Impact

The practical implications of this technology are significant. In a live demonstration, NVIDIA showed:

- Real-time ray tracing at 4K resolution with frame rates exceeding 144 FPS

- AI-generated high-quality frames reducing actual render load by 75%

- Dynamic resolution scaling without visible quality loss

- Unprecedented geometry complexity in real-time rendering

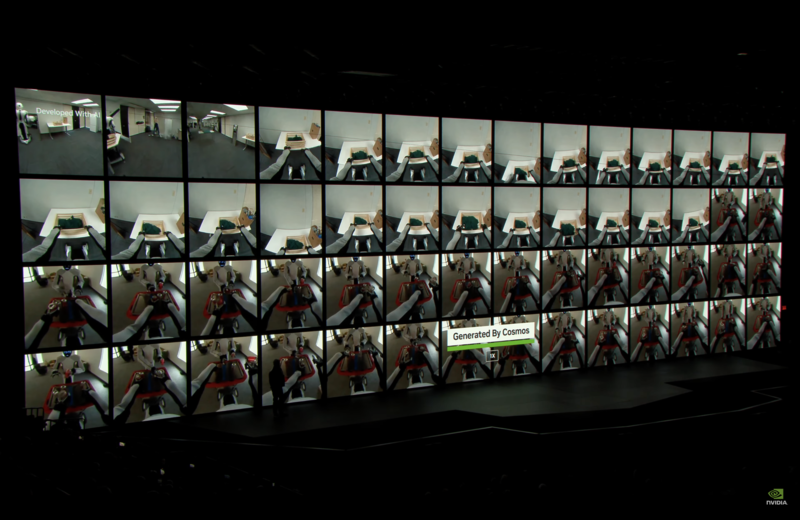

NVIDIA Cosmos: The Foundation for Physical AI

In what may be the keynote's most significant announcement, NVIDIA introduced Cosmos, describing it as "the world's first world foundation model platform." This platform represents NVIDIA's bold push into physical AI, aiming to do for robotics and autonomous systems what large language models did for natural language processing.

Understanding World Foundation Models

Cosmos introduces a new paradigm in AI development through what NVIDIA calls "world foundation models" (WFMs). These models differ from traditional AI models in several key ways:

| Aspect | Traditional AI Models | World Foundation Models |
|---|---|---|
| Input Types | Usually single modality | Multi-modal (text, video, sensor data) |
| Understanding | Abstract/symbolic | Physics-based/spatial |
| Output | Digital content | Physical world interactions |
| Training Data | Internet content | Real-world physics & interactions |
| Primary Use | Content generation | Robot & AV development |

Core Components of Cosmos

The platform comprises several revolutionary components:

1. Data Processing Pipeline

- NVIDIA AI and CUDA-accelerated

- Processes 20 million hours of video in 14 days

- Previously required 3+ years on CPU-only systems

- Powered by NVIDIA NeMo Curator
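The figures in this list imply a rough speedup and throughput, worked out below. This is a back-of-the-envelope sketch: it treats "3+ years" as exactly three 365-day years, so the speedup is a lower bound, and the function and variable names are chosen for this example.

```python
def pipeline_stats(video_hours: float, accelerated_days: float, cpu_years: float):
    """Return (speedup over CPU-only, video hours processed per day)."""
    cpu_days = cpu_years * 365          # lower bound, since the source says "3+ years"
    speedup = cpu_days / accelerated_days
    hours_per_day = video_hours / accelerated_days
    return speedup, hours_per_day


speedup, hours_per_day = pipeline_stats(
    video_hours=20_000_000, accelerated_days=14, cpu_years=3
)
print(f"At least {speedup:.0f}x faster, about {hours_per_day:,.0f} video hours per day")
# At least 78x faster, about 1,428,571 video hours per day
```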

2. Advanced Tokenizers

The NVIDIA Cosmos Tokenizer brings significant improvements:

| Metric | Improvement |
|---|---|
| Compression | 8x higher than current tokenizers |
| Processing Speed | 12x faster processing |
| Token Types | Supports visual, spatial, and temporal tokens |

3. Model Architecture

Two complementary model types:

- Autoregressive models for real-time applications

- Diffusion-based models for high-quality generation

Real-World Applications

Huang demonstrated several groundbreaking applications of Cosmos:

Video Understanding & Search

- Intelligent scenario identification

- Physics-aware search capabilities

- Real-time event detection

Synthetic Data Generation

- Physics-based photoreal video generation

- Environmental variation creation

- Edge case scenario development

Physical AI Development

- Model training and evaluation

- Reinforcement learning environments

- Performance testing

Multiverse Simulation

- Future outcome prediction

- Path planning optimization

- Safety validation

Early Adoption and Industry Impact

The platform has already garnered significant industry support, with early adopters including:

| Sector | Companies | Applications |
|---|---|---|
| Robotics | 1X, Agile Robots, Figure AI | Humanoid robot development |
| Automotive | Waabi, Wayve, Foretellix | AV software development |
| Ridesharing | Uber | Autonomous mobility |
| Manufacturing | XPENG, Hillbot | Industrial automation |

Integration with Omniverse

A key strength of Cosmos lies in its integration with NVIDIA's Omniverse platform:

"When you connect Cosmos to Omniverse, it provides the grounding, the ground truth that can control and condition the Cosmos generation," Huang explained. "As a result, what comes out of Cosmos is grounded on truth."

This integration enables:

- Physics-based simulation validation

- Real-time scenario generation

- Digital twin development

- Synthetic data creation

Training Data Revolution

One of the most significant aspects of Cosmos is its approach to training data generation. The platform was trained on:

- 20 million hours of video

- Focus on physical dynamics

- Real-world interactions

- Natural phenomena

- Human motion and manipulation

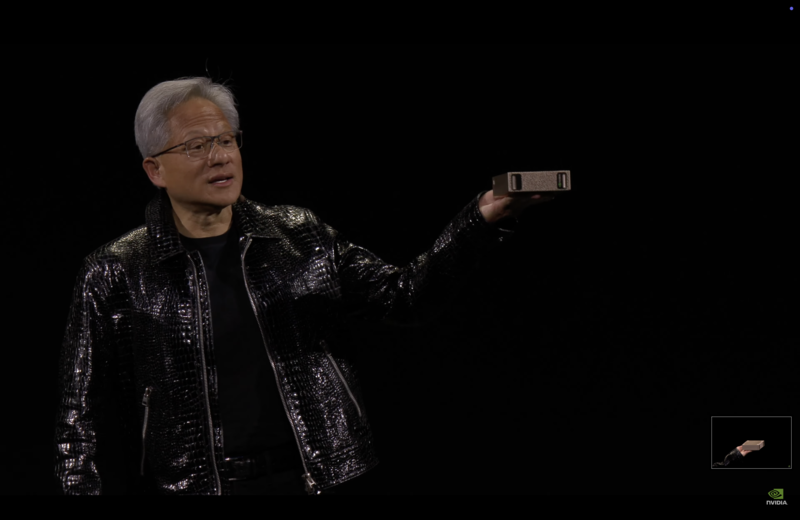

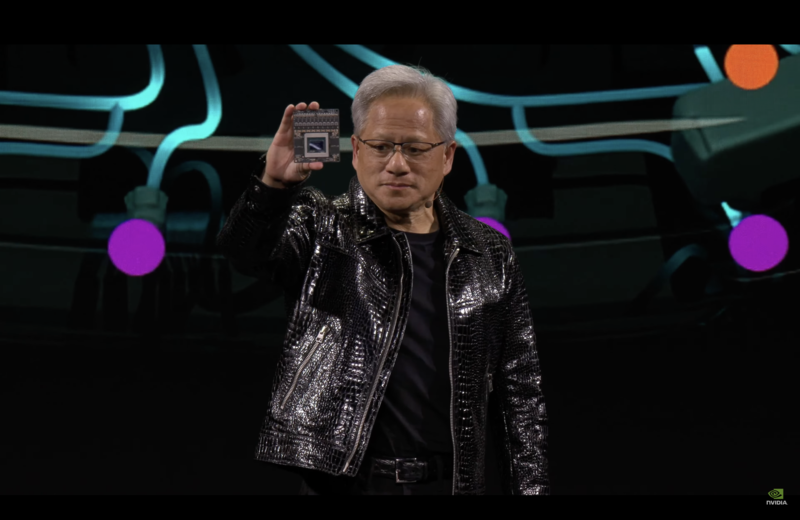

Project DIGITS: AI Supercomputing in Your Pocket

In perhaps the keynote's most theatrical moment, Jensen Huang reached into his pocket to reveal what he called "NVIDIA's latest AI supercomputer": Project DIGITS. This compact device is a remarkable engineering achievement, bringing the power of NVIDIA's Grace Blackwell architecture to a form factor that can literally fit in a jacket pocket.

The Evolution of AI Computing

To understand the significance of Project DIGITS, Huang reflected on NVIDIA's journey in AI computing:

| Era | Product | Impact |
|---|---|---|
| 2016 | DGX-1 | First AI supercomputer, delivered to OpenAI |
| 2020 | A100 | Enterprise AI acceleration |
| 2023 | H100 | Transformer optimization |
| 2025 | Project DIGITS | Personal AI supercomputing |

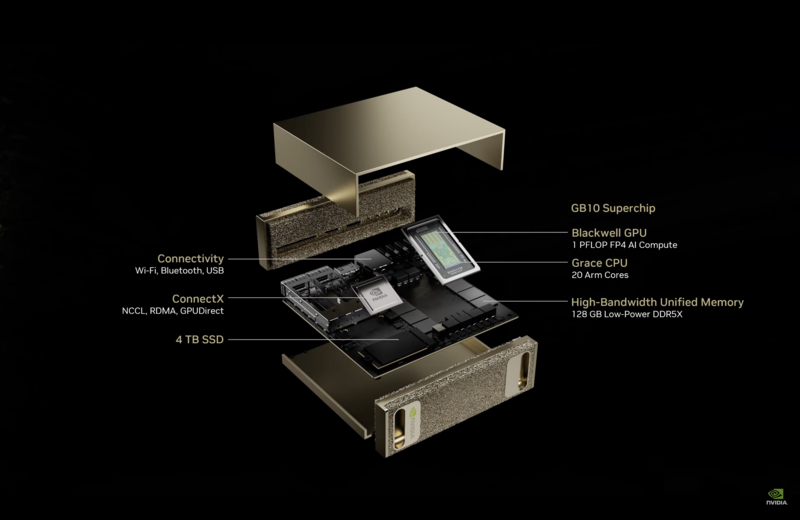

Technical Specifications

At the heart of Project DIGITS lies the GB10 Grace Blackwell Superchip, developed in collaboration with MediaTek:

| Component | Specification |
|---|---|
| GPU | GB10 Blackwell |
| CPU | NVIDIA Grace (ARM-based) |
| Memory | 128 GB unified memory |
| Storage | Up to 4 TB NVMe |
| Power Consumption | Standard outlet compatible |
| Form Factor | Pocket-sized |
| Model Capacity | Up to 200B parameters |

Interconnect and Scalability

One of the most impressive aspects of Project DIGITS is its scalability:

Single unit capabilities:

- 200B parameter models

- 1 petaflop of AI performance (at FP4 precision)

- Real-time inference

Dual unit configuration:

- 405B parameter models

- NVIDIA ConnectX networking

- GPU Direct RDMA support
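A quick memory estimate shows why these model sizes line up with 128 GB of unified memory per unit. The sketch assumes weights are stored at 4 bits per parameter and counts only the weights, ignoring the KV cache, activations, and system overhead. Those assumptions are this example's, not NVIDIA's published sizing method.

```python
UNIT_MEMORY_GB = 128  # unified memory per Project DIGITS unit, from the spec table


def weights_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, units: int = 1) -> bool:
    """True if the weights alone fit in the combined memory of `units` devices."""
    return weights_gb(params_billion, bits_per_param) <= UNIT_MEMORY_GB * units


for label, params_b, units in [("single unit", 200, 1), ("two linked units", 405, 2)]:
    for bits in (16, 8, 4):
        need = weights_gb(params_b, bits)
        verdict = "fits" if fits(params_b, bits, units) else "does not fit"
        print(f"{params_b}B @ {bits}-bit on {label}: {need:.1f} GB, {verdict}")
```

Under these assumptions, a 200B model needs about 100 GB at 4 bits and fits on one unit, while a 405B model needs about 202.5 GB and fits only across two. At 8 or 16 bits, neither configuration holds the weights.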

Software Ecosystem

Project DIGITS runs on a complete AI software stack:

| Component | Description |
|---|---|
| Operating System | NVIDIA DGX OS (Linux-based) |
| Development Tools | Full NVIDIA AI Enterprise stack |
| Tools and Frameworks | PyTorch, Python, Jupyter notebooks |
| Libraries | NVIDIA RAPIDS, NeMo framework |
| Services | NVIDIA NIM microservices |
| Cloud Integration | DGX Cloud compatible |

Target Applications

The device is designed to support a wide range of AI development scenarios:

Model Development

- Prototyping large language models

- Fine-tuning existing models

- Testing and validation

Edge Computing

- Real-time inference

- Local AI processing

- Privacy-preserving computation

Education and Research

- Academic AI research

- Student development

- Research prototyping

Enterprise Development

- Proof-of-concept development

- Application testing

- Local development and testing

The GB10 Breakthrough

"This little thing here is in full production," Huang announced, highlighting the GB10 chip's significance. "We did it in collaboration with MediaTek... they worked with us to build this CPU SoC and connect it with chip-to-chip NVLink to the Blackwell GPU."

The GB10 chip represents several engineering breakthroughs:

| Feature | Innovation |
|---|---|
| Integration | Combined CPU and GPU on single chip |
| Connectivity | Chip-to-chip NVLink |
| Power Efficiency | Standard outlet operation |
| Thermal Design | Advanced cooling in compact form factor |
| Manufacturing | Full production ready |

Market Impact and Availability

Set to launch in May 2025 with a starting price of $3,000, Project DIGITS aims to democratize AI development:

| Market Segment | Impact |
|---|---|
| Individual Developers | Accessible AI supercomputing |
| Startups | Reduced infrastructure costs |
| Research Institutions | Local computation capability |
| Enterprise | Rapid prototyping and testing |

DRIVE Thor and the Future of Autonomous Mobility

"The autonomous vehicle revolution has arrived," Huang declared, before revealing a series of major announcements that positioned NVIDIA at the forefront of both automotive computing and robotics. At the center of these announcements was DRIVE Thor, NVIDIA's next-generation processor for autonomous vehicles, alongside significant partnerships with industry leaders.

DRIVE Thor: The Next Generation of Autonomous Computing

DRIVE Thor represents a 20x performance increase over its predecessor, Orin, which has become the de facto standard in autonomous vehicle computing:

| Feature | Specification | Improvement over Orin |
|---|---|---|
| Processing Power | 2000 TOPS | 20x increase |
| Memory Bandwidth | 1 TB/s | 5x increase |
| Power Efficiency | 75 TOPS/W | 3x improvement |
| Sensor Processing | 500+ cameras/sensors | 10x increase |
| Safety Certification | ASIL-D | Industry highest |

Major Industry Partnerships

The keynote included several significant partnership announcements:

Toyota Collaboration

- World's largest automaker adopting DRIVE AGX platform

- Implementation of DriveOS operating system

- Focus on safety-certified autonomous capabilities

- Production timeline starting 2025

Additional Automotive Partners

| Manufacturer | Implementation | Timeline |
|---|---|---|
| Mercedes-Benz | DRIVE Hyperion | 2025 |
| BYD | Next-gen EVs | 2025 |
| JLR | Advanced driver assistance | 2025 |
| Lucid | Electric vehicle platform | 2026 |
| Volvo | Safety systems | 2025 |

DriveOS: Safety-First Approach

A major breakthrough announced was DriveOS achieving ASIL-D certification:

"DriveOS is now the first software-defined, programmable AI computer that has been certified up to ASIL-D, which is the highest standard of functional safety for automobiles," Huang emphasized.

Key certifications and standards:

- ISO 26262 compliance

- CUDA functional safety certification

- 15,000 engineering years of development

- Comprehensive testing and validation

The Three-Computer Approach

Huang outlined NVIDIA's strategic vision for autonomous vehicle development, centered around three essential computers:

| Computer | Purpose | Technology |
|---|---|---|
| Training (DGX) | AI model development | NVIDIA DGX SuperPOD |
| Simulation (OVX) | Testing and validation | Omniverse + Cosmos |
| Deployment (AGX) | In-vehicle computing | DRIVE Thor |

Robotics Revolution: Isaac GR00T

Alongside automotive announcements, NVIDIA unveiled significant advances in robotics with the Isaac GR00T platform:

Synthetic Motion Generation

- Human demonstration capture

- Motion multiplication

- Physics-based validation

- Domain randomization
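The steps listed above can be pictured with a toy example: take one captured demonstration, create many slightly perturbed copies, randomize scene parameters for each, and discard copies that fail a plausibility check. Everything in this sketch is hypothetical, including the `SyntheticEpisode` class, the parameter ranges, and the floor check that stands in for real physics validation. It is not NVIDIA's pipeline or API.

```python
import random
from dataclasses import dataclass


@dataclass
class SyntheticEpisode:
    trajectory: list     # list of (x, y, z) waypoints in metres
    friction: float      # randomized surface friction coefficient
    light_level: float   # randomized scene brightness multiplier


def is_plausible(trajectory, floor_z=0.0):
    """Crude stand-in for physics validation: no waypoint dips below the floor."""
    return all(z >= floor_z for _, _, z in trajectory)


def multiply_demonstration(demo, copies, jitter=0.01, seed=0):
    """Turn one demonstration into many perturbed, domain-randomized episodes."""
    rng = random.Random(seed)
    episodes = []
    for _ in range(copies):
        # Motion multiplication: nudge every coordinate by up to +/- jitter.
        trajectory = [
            tuple(coord + rng.uniform(-jitter, jitter) for coord in point)
            for point in demo
        ]
        if is_plausible(trajectory):
            # Domain randomization: vary scene parameters per episode.
            episodes.append(
                SyntheticEpisode(trajectory, rng.uniform(0.4, 1.0), rng.uniform(0.5, 1.5))
            )
    return episodes


demo = [(0.00, 0.00, 0.10), (0.10, 0.05, 0.15), (0.20, 0.10, 0.05)]
episodes = multiply_demonstration(demo, copies=100)
print(len(episodes), "synthetic episodes from 1 demonstration")
```

A production system would replace the jitter with physically simulated variations and the floor check with a full simulator, but the data flow is the same: a few human demonstrations in, a much larger validated dataset out.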

Training Pipeline Innovation

| Stage | Technology | Improvement |
|---|---|---|
| Demonstration | GR00T-Teleop (Apple Vision Pro) | 10x faster data collection |
| Multiplication | GR00T-Mimic | 100x data amplification |
| Generation | GR00T-Gen | 1000x scenario variation |
| Validation | Isaac Sim | Real-time testing |

Industry Applications

The combination of DRIVE Thor and Isaac GR00T is enabling new applications across industries:

Autonomous Transportation

- Long-haul trucking with Aurora

- Urban mobility with Uber

- Last-mile delivery with Nuro

Industrial Robotics

- Warehouse automation

- Manufacturing processes

- Quality control systems

Humanoid Robotics

Several companies announced adoption of NVIDIA's platforms:

- Figure AI for general-purpose humanoids

- 1X for specialized applications

- Agile Robots for industrial use

The Path Forward

NVIDIA's automotive and robotics initiatives represent a comprehensive approach to physical AI:

Safety First

- Certified systems

- Redundant architecture

- Extensive validation

Scale Ready

- Production-grade solutions

- Global manufacturing

- Industry partnerships

Future Proof

- Software-defined platforms

- Regular updates

- Expandable capabilities

Enterprise AI: The Era of AI Agents

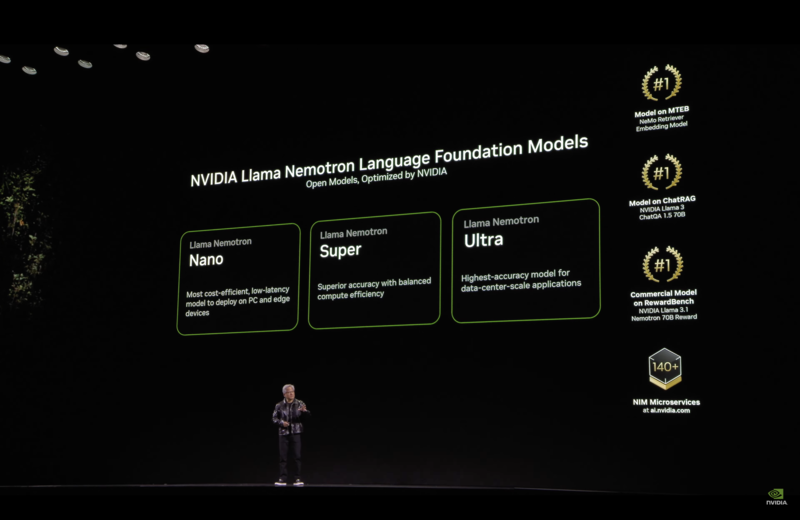

The final major segment of NVIDIA's keynote focused on enterprise AI, introducing the Llama Nemotron family of models and a comprehensive vision for agentic AI. This announcement represents NVIDIA's strategy to provide enterprises with the tools needed to develop and deploy AI agents across their operations.

Llama Nemotron Model Family

Building on the success of Meta's Llama 3.1, which has seen over 650,000 downloads, NVIDIA has created a specialized family of models optimized for enterprise use:

| Model Tier | Target Use Case | Capabilities |
|---|---|---|
| Nano | Edge deployment | Low-latency, real-time responses |
| Super | Single GPU deployment | High throughput, balanced performance |
| Ultra | Data center scale | Maximum accuracy, teacher model |

Key Features and Capabilities

The Llama Nemotron models introduce several innovations:

Performance Metrics

| Capability | Improvement Over Base Llama |
|---|---|
| Instruction Following | 2.1x better accuracy |
| Chat Performance | 1.8x response quality |
| Function Calling | 2.3x reliability |
| Code Generation | 1.9x accuracy |
| Math Processing | 2.4x precision |

Enterprise Integration

- Native NVIDIA NIM microservices support

- Built-in enterprise security features

- Seamless cloud deployment options

- Integration with existing IT infrastructure

Cosmos Nemotron Vision Language Models

Alongside the language models, NVIDIA introduced vision-language models specifically designed for enterprise use:

| Application | Capabilities |
|---|---|
| Video Analysis | Real-time content understanding |
| Visual Search | High-precision image matching |
| Document Processing | Multi-modal document analysis |
| Quality Control | Automated visual inspection |

The Three Pillars of Enterprise AI

NVIDIA's enterprise strategy centers around three key components:

1. NVIDIA NIM (AI Microservices)

- Pre-packaged, optimized AI services

- Container-based deployment

- Cross-platform compatibility

- Enterprise-grade security
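In practice, a containerized model service like this is usually called over HTTP. The sketch below builds a chat request in the OpenAI-compatible format that NIM language-model containers are documented to expose. The base URL, port, and model name are assumptions for a locally running container and should be replaced with whatever a given deployment actually uses. Only the Python standard library is needed.

```python
import json
import urllib.request


def build_chat_request(prompt: str,
                       base_url: str = "http://localhost:8000/v1",   # assumed local endpoint
                       model: str = "meta/llama-3.1-8b-instruct"):   # assumed model name
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Summarize the CES 2025 keynote in one sentence.")
print(request.get_method(), request.full_url)

# Sending requires a running service, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

Because the request format matches a widely used API shape, existing client code can often be pointed at a self-hosted container by changing only the base URL and model name.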

2. NVIDIA NeMo

- Digital employee onboarding

- Training and evaluation system

- Customization toolkit

- Performance monitoring

3. Enterprise Blueprints

- Industry-specific solutions

- Reference architectures

- Implementation guides

- Best practices

Real-World Applications

NVIDIA demonstrated several enterprise applications of its AI technology:

Knowledge Worker Support

- Interactive research assistants

- Document analysis and synthesis

- Automated report generation

- Meeting summarization

Industrial Applications

- Predictive maintenance

- Quality control

- Process optimization

- Supply chain management

Development Tools

- Code generation and review

- Architecture optimization

- Testing automation

- Documentation generation

Major Enterprise Partnerships

Several major companies announced adoption of NVIDIA's enterprise AI solutions:

| Company | Implementation | Use Case |
|---|---|---|
| SAP | Joule Integration | Enterprise process automation |
| ServiceNow | Platform AI | Workflow optimization |
| Cadence | Design Tools | Chip design automation |
| Siemens | Industrial AI | Manufacturing optimization |

Security and Compliance

NVIDIA emphasized its commitment to responsible AI development:

Built-in Safeguards

- Content filtering

- Bias detection

- Audit trails

- Access controls

Compliance Features

- GDPR compatibility

- SOC 2 compliance

- Industry certifications

- Data sovereignty support

Integration with Existing Systems

The enterprise solutions are designed to work seamlessly with:

| System Type | Integration Method |
|---|---|
| Cloud Services | Native API support |
| Data Centers | Container deployment |
| Edge Systems | Optimized inference |
| Development Tools | SDK integration |

Conclusion: NVIDIA's Vision for AI's Future

As Jensen Huang concluded his landmark CES 2025 keynote, the full scope of NVIDIA's vision became clear. This wasn't just a series of product announcements; it was a comprehensive blueprint for the next era of computing, where AI transitions from generating content on screens to actively shaping the physical world around us.

The Five Pillars of NVIDIA's Future

The keynote's major announcements form a cohesive strategy for AI's evolution:

| Pillar | Technology | Impact |
|---|---|---|
| Consumer AI | RTX 50 Series | Democratizing AI processing |
| Physical AI | Cosmos Platform | Enabling robotics and automation |
| Personal Computing | Project DIGITS | Bringing AI development to everyone |
| Autonomous Systems | DRIVE Thor | Revolutionizing transportation |
| Enterprise AI | Llama Nemotron | Transforming business operations |

Market Impact and Industry Transformation

NVIDIA's announcements are set to influence multiple industries:

Gaming and Creative Work

- Neural rendering becoming standard

- AI-enhanced content creation

- Real-time ray tracing at scale

Transportation and Robotics

- Autonomous vehicle acceleration

- Humanoid robot development

- Industrial automation advancement

Enterprise and Cloud Computing

- Agentic AI deployment

- Business process transformation

- AI-powered decision making

Looking Ahead: The Three Waves of AI

Huang's vision for AI's evolution is clear:

| Wave | Current Status | Next Steps |
|---|---|---|
| Perception AI | Mature | Integration into new domains |
| Generative AI | Rapidly evolving | Enterprise adoption |
| Physical AI | Beginning | Infrastructure development |

Challenges and Opportunities

As NVIDIA pushes the boundaries of AI, several key challenges and opportunities emerge:

Technical Challenges

- Power consumption optimization

- Safety certification

- Scale of computation

- Data quality and quantity

Industry Opportunities

- New business models

- Productivity improvements

- Innovation acceleration

- Market expansion

The Road Ahead

"The ChatGPT moment for general robotics is just around the corner," Huang predicted during the keynote. With the announcements at CES 2025, NVIDIA has laid the groundwork for this transformation.

Key developments to watch:

- Adoption of Cosmos across robotics companies

- Integration of Project DIGITS into development workflows

- Rollout of RTX 50 Series AI capabilities

- Deployment of DRIVE Thor in vehicles

- Enterprise implementation of Llama Nemotron

Final Thoughts

NVIDIA's CES 2025 keynote represents a pivotal moment in technology history. The company has not only showcased impressive technical achievements but has also outlined a clear vision for how AI will evolve from a tool that helps us create digital content to a technology that actively shapes our physical world.

As we look to the future, the implications of these announcements extend far beyond individual products or technologies. NVIDIA has essentially laid out a roadmap for the next decade of computing, where the boundaries between digital and physical worlds increasingly blur, and AI becomes an integral part of how we live, work, and interact with our environment.

The question is no longer if AI will transform our world, but how quickly and in what ways this transformation will occur. With these announcements, NVIDIA has positioned itself not just as a technology provider, but as an architect of our AI-driven future.

Written by [Author Name] on January 7, 2025
